Paper Summary: Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition

This is a summary of an important paper on spatial pyramid pooling (SPP), a technique used in CNN architectures that lets the network accept input images of any size. It is also reported to make classification more robust to scale and object deformation.

Review: what is a pooling layer?

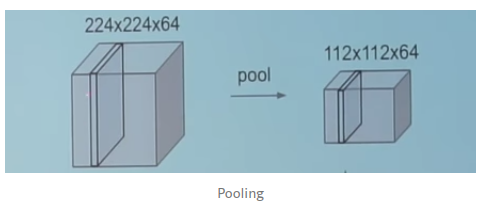

The pooling layer is a downsampling operation, typically applied after a convolutional layer. Max pooling and average pooling are the two most common kinds.

The function of a pooling layer is to progressively reduce the spatial size of the representation, which reduces the number of parameters and the amount of computation in the network. A pooling layer operates on each feature map independently.

For example, applying pooling with stride 2 to 64 intermediate feature maps of size 224x224 yields 64 feature maps of size 112x112.

224x224x64 (64 feature maps, one per filter) –> pooling –> 112x112x64

With stride 2 (e.g. a 2x2 window), the height and width of each feature map are halved, while the number of feature maps (the depth) stays the same.
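To make the 224x224 –> 112x112 example concrete, here is a minimal pure-Python sketch of 2x2 max pooling with stride 2. It assumes each feature map is a list of rows; the function names `max_pool2d` and `pool_all` are hypothetical, and small 16x16 maps stand in for the 224x224 ones to keep it fast.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Max-pool one 2D feature map (a list of rows) with a size x size window."""
    height, width = len(fmap), len(fmap[0])
    out_h = (height - size) // stride + 1
    out_w = (width - size) // stride + 1
    return [
        [
            max(fmap[i * stride + di][j * stride + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def pool_all(fmaps, size=2, stride=2):
    """Pool every feature map (channel) independently; the depth is unchanged."""
    return [max_pool2d(f, size, stride) for f in fmaps]


fmap = [[1, 3, 2, 4],
        [5, 6, 1, 0],
        [7, 2, 9, 8],
        [0, 1, 3, 4]]
print(max_pool2d(fmap))  # [[6, 4], [7, 9]]

# 64 toy 16x16 maps -> 64 maps of 8x8 (the same halving as 224x224 -> 112x112)
maps = [[[(k + r + c) % 5 for c in range(16)] for r in range(16)] for k in range(64)]
pooled = pool_all(maps)
print(len(pooled), len(pooled[0]), len(pooled[0][0]))  # 64 8 8
```

Swapping `max` for an average over the window would give average pooling with the same output shape.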

Why use SPP?

Size restriction. Why do CNNs need a fixed input size? The sliding windows used in the convolutional layers can cope with any image size, but the fully-connected layers have a fixed-size input by construction. It is the transition from the convolutional layers to the fully-connected layers that imposes the size restriction. As a result, images are often cropped or warped to fit the size requirements of the network, which is far from ideal.

The job of the SPP layer, which sits between the last convolutional layer and the fully-connected layers, is to map an input of any size to a fixed-length output.
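A quick bit of arithmetic shows the mismatch. The sketch below assumes a hypothetical network whose last conv layer has 256 filters and whose conv stack shrinks the image by a total stride of 16. Simply flattening the last feature map gives a vector whose length changes with the image size, while an SPP layer with a 4x4, 2x2 and 1x1 pyramid always outputs 256 x 21 values.

```python
def flattened_length(height, width, channels=256, total_stride=16):
    """Length of the vector fed to the first FC layer if the last conv
    feature map is simply flattened (hypothetical stride/filter counts)."""
    fh, fw = height // total_stride, width // total_stride
    return channels * fh * fw


def spp_length(channels=256, levels=(4, 2, 1)):
    """Length of the SPP output: one pooled value per filter per bin."""
    return channels * sum(n * n for n in levels)


for size in [(224, 224), (180, 180), (320, 480)]:
    print(size, flattened_length(*size), spp_length())
# (224, 224) 50176 5376
# (180, 180) 30976 5376
# (320, 480) 153600 5376
```

An FC layer built for 50176 inputs cannot accept the 30976-long vector, which is exactly why cropping/warping was needed before SPP.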

What is SPP?

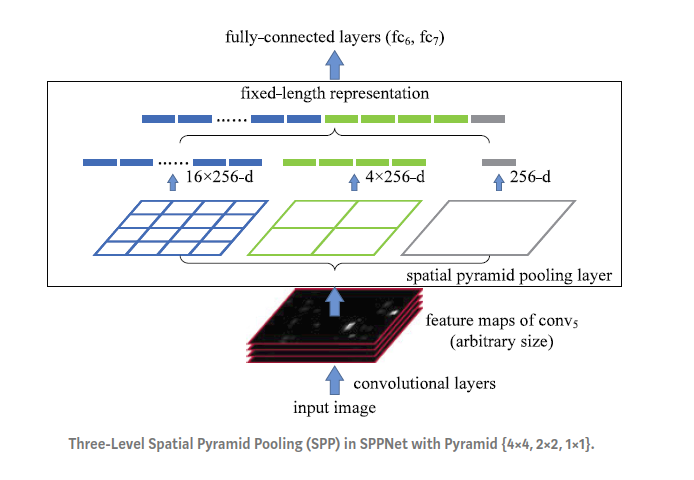

SPP divides the feature maps output by the conv layers into spatial bins whose sizes are proportional to the image size, so that the number of bins is fixed regardless of the image size.

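Example: one pyramid level of 16 bins dividing the feature map into a 4x4 grid, another level of 4 bins dividing it into a 2x2 grid, and a final level with a single bin covering the whole map. In each spatial bin, the responses of each filter are simply pooled using max pooling. With k filters in the last convolutional layer, the output is therefore a (16 + 4 + 1)k = 21k-dimensional vector, whatever the input size.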
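The 4x4 / 2x2 / 1x1 example can be sketched in pure Python as follows. Feature maps are assumed to be nested lists (channels x height x width), and the names are hypothetical. The bin boundaries use the floor/ceil rule familiar from adaptive max pooling, which always yields exactly n x n non-empty bins; this is an illustrative choice, not necessarily the paper's exact implementation.

```python
import math


def bin_edges(length, n):
    """Split indices [0, length) into n bins as (start, end) pairs.
    Neighbouring bins share at most one row/column when n does not divide length."""
    return [(math.floor(i * length / n), math.ceil((i + 1) * length / n))
            for i in range(n)]


def spatial_pyramid_pool(fmaps, levels=(4, 2, 1)):
    """Max-pool every feature map over an n x n grid for each n in `levels`
    and concatenate everything into one flat list of length
    len(fmaps) * sum(n * n for n in levels)."""
    height, width = len(fmaps[0]), len(fmaps[0][0])
    out = []
    for n in levels:
        for r0, r1 in bin_edges(height, n):
            for c0, c1 in bin_edges(width, n):
                for fmap in fmaps:  # one value per filter per bin
                    out.append(max(fmap[r][c]
                                   for r in range(r0, r1)
                                   for c in range(c0, c1)))
    return out


def make_maps(channels, height, width):
    """Deterministic toy feature maps standing in for real conv outputs."""
    return [[[(k + r * c) % 7 for c in range(width)] for r in range(height)]
            for k in range(channels)]


small = make_maps(3, 13, 13)  # e.g. conv output for a smaller image
large = make_maps(3, 20, 9)   # a larger image with a different aspect ratio
print(len(spatial_pyramid_pool(small)), len(spatial_pyramid_pool(large)))  # 63 63
```

With 3 filters the output is 3 x 21 = 63 values for both inputs; with 256 filters it would be 5376. The last level (n = 1) is a plain global max over each map.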

Paper Summary

Summary:

CNNs at the time required fixed-size input images. SPP is a pooling strategy that eliminates this requirement.

This new architecture can generate a fixed-length representation regardless of image size/scale.

Pyramid pooling is also robust to object deformations.

For object detection, SPP-net is also much faster than R-CNN.

“Using SPP-net, we compute feature maps from the entire image only once, and then pool features in arbitrary regions (sub-images) to generate fixed-length representations for training the detectors.”

Intro:

Requiring input images to always be the same size limits both the aspect ratio and the scale of the input image, so images have to be cropped or warped to fit. Cropping may cut off part of the object, and warping may introduce geometric distortion.

Why is there a shape requirement? Convolutional layers work as sliding windows, so they accept inputs of any size and produce feature maps whose size depends on the input. However, the fully-connected layers that receive these feature maps require, by definition, a fixed-size input.

We can add an SPP layer on top of the last convolutional layer. This pools the features and generates fixed-length outputs which are then fed into the FC layers.

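In other words, the information “aggregation” happens at a deeper stage of the network (between the convolutional and fully-connected layers) instead of at the input through cropping or warping.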

Change in architecture:

[image] –> [crop/warp] –> [Conv Layers] –> [FC Layers] –> output

to

[image] –> [Conv Layers] –> [spatial pyramid pooling] –> [FC Layers] –> output

What is SPP?

(an extension of the bag of words model)

- Partitions the image into divisions from finer to coarser levels and aggregates local features in them.

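What are coarser/finer levels of the image? “When you reduce an image, you get an image at a coarser scale, while the original is the finer scale: because in the big image there are fine details and in the small image, only coarser details.” In SPP the levels are bin grids over the feature map: a 4x4 grid is a finer level than a 2x2 grid, and the single 1x1 bin is the coarsest.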

SPP uses these multi-level spatial bins, whereas ordinary sliding-window pooling uses only a single window size.

Extra: Training with variable-size images increases scale-invariance and reduces over-fitting.

How does the pooling work?

https://www.cs.utexas.edu/~grauman/papers/grauman_darrell_iccv2005.pdf

The classifiers (FC layers) require fixed-length vectors. Such vectors can be generated by the Bag-of-Words (BoW) approach, which pools the features together. SPP maintains spatial information by pooling in local spatial bins. The spatial bins have sizes proportional to the image size, so the number of bins is fixed regardless of the image size. A sliding window cannot do this: with a fixed window size and stride, the number of outputs grows with the input size.

The coarsest pyramid level has a single bin that covers the entire image. This is a “global pooling” operation.
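The contrast between a fixed sliding window and pyramid bins can be checked numerically. This sketch assumes a hypothetical ordinary pooling layer with a 3x3 window and stride 2 and compares it with a 4x4 / 2x2 / 1x1 pyramid on square feature maps of a few sizes; all function names are illustrative.

```python
def sliding_window_outputs(side, window=3, stride=2):
    """Outputs per axis of ordinary pooling with a fixed window and stride."""
    return (side - window) // stride + 1


def pyramid_bin_widths(side, levels=(4, 2, 1)):
    """Approximate bin width at each pyramid level: proportional to the map size."""
    return [side / n for n in levels]


def pyramid_bin_count(levels=(4, 2, 1)):
    """Bins per filter for SPP: depends only on the levels, not on the map size."""
    return sum(n * n for n in levels)


for side in (10, 13, 20):
    per_axis = sliding_window_outputs(side)
    print(f"{side}x{side} map: sliding window gives {per_axis * per_axis} outputs, "
          f"SPP gives {pyramid_bin_count()} bins with widths {pyramid_bin_widths(side)}")
# 10x10 map: sliding window gives 16 outputs, SPP gives 21 bins with widths [2.5, 5.0, 10.0]
# 13x13 map: sliding window gives 36 outputs, SPP gives 21 bins with widths [3.25, 6.5, 13.0]
# 20x20 map: sliding window gives 81 outputs, SPP gives 21 bins with widths [5.0, 10.0, 20.0]
```

The last width always equals the map side: that single bin is the global pooling level.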

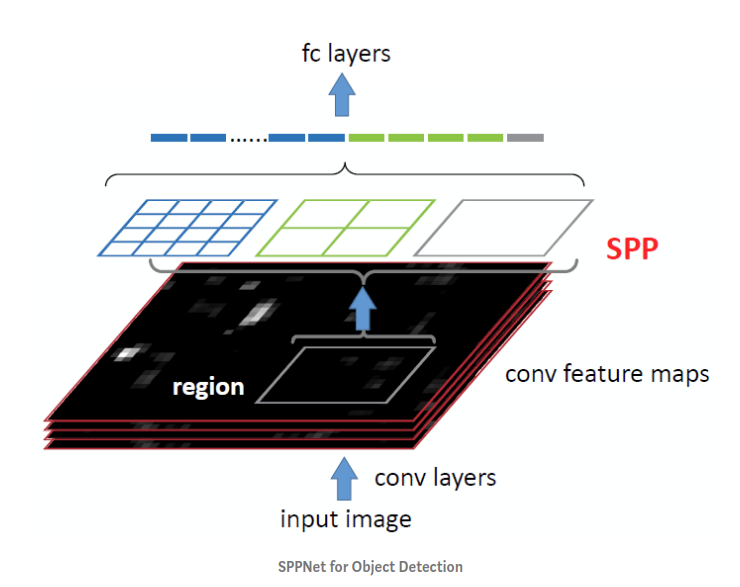

SPP-Net for Object Detection

The detection part of the paper mostly discusses adapting R-CNN to use an SPP-net architecture.

SPP-net can also be used for object detection. We extract the feature maps from the entire image only once (possibly at multiple scales). Then we apply SPP to each candidate window on the feature maps to pool a fixed-length representation of that window. This is much faster than R-CNN, which runs the convolutional network separately on each of roughly 2,000 warped candidate windows per image.
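The detection idea (compute the feature maps once, then pool each candidate window) can be sketched as below. Assumptions: a hypothetical total stride of 16 between image pixels and feature-map cells, toy feature maps for a 320x240 image, and boxes that lie inside the image. The paper describes its own rule for mapping window corners onto the feature maps; this sketch swaps in a simpler floor/ceil projection.

```python
import math


def project_box(box, stride):
    """Map an image-space box (x0, y0, x1, y1) onto feature-map cells
    (c0, r0, c1, r1), end-exclusive, assuming one cell per `stride` pixels."""
    x0, y0, x1, y1 = box
    c0, r0 = x0 // stride, y0 // stride
    c1 = max(math.ceil(x1 / stride), c0 + 1)  # keep at least one cell
    r1 = max(math.ceil(y1 / stride), r0 + 1)
    return c0, r0, c1, r1


def spp_region(fmaps, box, stride=16, levels=(4, 2, 1)):
    """Spatial pyramid max pooling restricted to the region under `box`."""
    height, width = len(fmaps[0]), len(fmaps[0][0])
    c0, r0, c1, r1 = project_box(box, stride)
    c1, r1 = min(c1, width), min(r1, height)  # clip to the feature map
    h, w = r1 - r0, c1 - c0
    out = []
    for n in levels:
        for i in range(n):
            ra, rb = r0 + math.floor(i * h / n), r0 + math.ceil((i + 1) * h / n)
            for j in range(n):
                ca, cb = c0 + math.floor(j * w / n), c0 + math.ceil((j + 1) * w / n)
                for fmap in fmaps:  # one value per filter per bin
                    out.append(max(fmap[r][c]
                                   for r in range(ra, rb) for c in range(ca, cb)))
    return out


def make_maps(channels, height, width):
    """Deterministic toy feature maps standing in for real conv outputs."""
    return [[[(k * 3 + r + 2 * c) % 11 for c in range(width)] for r in range(height)]
            for k in range(channels)]


feature_maps = make_maps(3, 15, 20)  # computed once for a 320x240 image (toy)
proposals = [(0, 0, 320, 240), (50, 40, 130, 200), (100, 100, 110, 105)]
features = [spp_region(feature_maps, box) for box in proposals]
print([len(f) for f in features])  # [63, 63, 63]
```

Every candidate window, large or tiny, becomes a 63-value vector (3 filters x 21 bins) without re-running any convolutions.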